When managing an enterprise site, it can be easy to lose track of the overall architecture of your pages.

Unfortunately, if you never step back and look at the bigger picture, your end product can end up confusing both users and search engines.

Considering the overall structure of your site is vital, but you also need to dig deeper and ensure that each page is mapped out correctly.

Neglecting data classification can hurt your SEO potential – most notably, search engines’ ability to index your site effectively.

We have compiled the six most common data classification mistakes our clients are making, along with the impact they could have and how to combat them.

1. Having poorly defined core topics

Before you can even begin analysing your site architecture and identifying areas that need remapping, stakeholders must agree on the core business focus. This means understanding the brand’s main offering, so that the bulk of the core products or services is easy to navigate to from the site architecture.

Sites that spread themselves too thin, offering fragmented content across a wide range of topics, will struggle to meet Google’s E-E-A-T criteria. Defining core topics is the foundation that must be laid before you build out strong topic clusters, site taxonomy and internal linking.

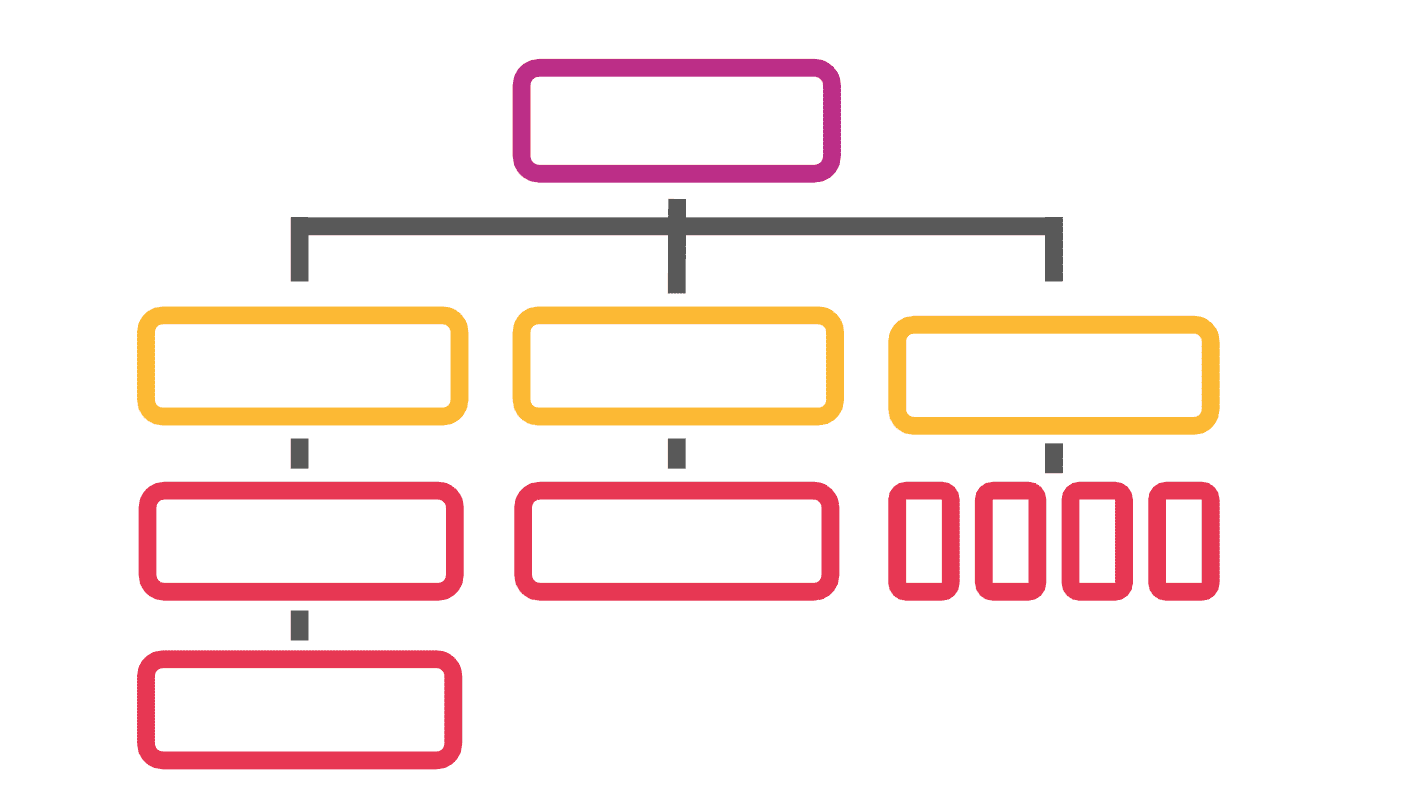

2. Choosing the wrong taxonomy

There are multiple taxonomy types that websites can use, but choosing a model that doesn’t suit your website will create a confusing structure for both Google and users.

Enterprise websites generally won’t use a flat taxonomy, which places every category at the top level. Large sites more commonly use hierarchical taxonomies, which nest subcategories within subcategories.

When using this model, keep the number of levels in each hierarchy low to improve content findability. Otherwise, users can get lost on your website, and search engines are less likely to crawl and index deeply buried sections of it.
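One rough way to check how deep a hierarchy runs is to count the folders in your URLs. The sketch below assumes that URL path segments mirror your taxonomy levels (not true of every site), and the limit of three levels is a hypothetical value chosen purely for illustration. Click depth from the homepage, as measured by a site crawler, is usually the more reliable signal.

```python
from urllib.parse import urlparse

# Hypothetical threshold for illustration only; pick one that suits your site.
MAX_DEPTH = 3


def path_depth(url: str) -> int:
    """Count non-empty path segments, e.g. /shoes/running/mens/ -> 3."""
    return len([segment for segment in urlparse(url).path.split("/") if segment])


def too_deep(urls: list[str], max_depth: int = MAX_DEPTH) -> list[tuple[str, int]]:
    """Return (url, depth) pairs whose path nests deeper than max_depth."""
    flagged = []
    for url in urls:
        depth = path_depth(url)
        if depth > max_depth:
            flagged.append((url, depth))
    return flagged


if __name__ == "__main__":
    sample = [
        "https://example.com/shoes/",
        "https://example.com/shoes/running/mens/",
        "https://example.com/shoes/running/mens/trail/waterproof/",
    ]
    for url, depth in too_deep(sample):
        print(f"{depth} levels: {url}")
```

Run against a full URL export, the flagged list gives a starting point for deciding which branches of the hierarchy could be flattened.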

If your site covers a large scope of content and specialisms, a network taxonomy will work well – just remember to put plenty of work into your URL and hierarchical structures, and consider regular link audits to ensure your internal linking remains on point.

Review and e-commerce sites typically use faceted taxonomies, in which content is divided by the attributes of the products being sold, such as type, colour or brand.
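To make the difference from a hierarchical tree concrete, here is a minimal sketch of faceted classification. The catalogue, attribute names and helper functions are all hypothetical: each product is described by attributes rather than by a single position in a category tree, so it can be reached through several facet combinations.

```python
from collections import Counter

# Hypothetical catalogue: products are classified by attributes (facets).
PRODUCTS = [
    {"name": "Trail Runner", "type": "shoe", "colour": "black", "brand": "Acme"},
    {"name": "Road Racer", "type": "shoe", "colour": "white", "brand": "Acme"},
    {"name": "Rain Shell", "type": "jacket", "colour": "black", "brand": "Northwind"},
]


def filter_by(products, **facets):
    """Return the products that match every requested facet value."""
    return [p for p in products if all(p.get(k) == v for k, v in facets.items())]


def facet_counts(products, facet):
    """Count how many products fall under each value of a single facet."""
    return Counter(p[facet] for p in products)


if __name__ == "__main__":
    print([p["name"] for p in filter_by(PRODUCTS, colour="black")])
    print(facet_counts(PRODUCTS, "brand"))
```

Here "Trail Runner" appears under both the black colour facet and the Acme brand facet, which a single-parent hierarchy could not express.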

Don’t forget that taxonomy optimisation is a continuous process. If content is underperforming, or user journeys drop off at specific points, findability and navigation roadblocks may be to blame, in which case your taxonomy may need restructuring.

3. Not managing your category and site structures optimally

Tagging and categorising content in the CMS is an obvious job for SEOs, but making sure the overall structure stays easy to navigate is often overlooked. When many people upload content to a site with archives dating back years, it’s easy for categorisation and structural errors to fall through the gaps.

For example, you may inadvertently have duplicate tags and categories, which can confuse search engines. There could also be singular and plural variations of categories that have been created over time and never tidied up. Creating optimised categories and complementary tags for grouped content ensures that your site has a clear hierarchical structure, is easy to navigate and is a doddle to crawl.
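Near-duplicate tags like these can be surfaced by normalising each label and grouping those that collapse to the same key. The sketch below assumes you can export your tag and category names as a plain list. Its singularisation rule is a deliberately naive English heuristic, not a real stemmer, so expect some false positives and negatives (for example, "news" becomes "new").

```python
import re
from collections import defaultdict


def normalise(label: str) -> str:
    """Crude comparison key: lowercase, unify separators, naively singularise the last word."""
    key = re.sub(r"[\s_-]+", " ", label.strip().lower())
    words = key.split(" ")
    last = words[-1]
    if last.endswith("ies") and len(last) > 4:
        last = last[:-3] + "y"          # accessories -> accessory
    elif last.endswith("s") and not last.endswith("ss") and len(last) > 3:
        last = last[:-1]                # shoes -> shoe (but leaves "business" alone)
    words[-1] = last
    return " ".join(words)


def find_duplicates(labels: list[str]) -> list[list[str]]:
    """Group labels that share a normalised key; return only groups with 2+ members."""
    groups: dict[str, list[str]] = defaultdict(list)
    for label in labels:
        groups[normalise(label)].append(label)
    return [sorted(group) for group in groups.values() if len(group) > 1]


if __name__ == "__main__":
    tags = ["Running Shoe", "running-shoes", "Accessories", "Accessory", "Business"]
    for group in find_duplicates(tags):
        print(group)
```

Each group it prints is a candidate for merging into a single canonical category, ideally with redirects from the retired versions.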

4. Not mapping the purpose of each page

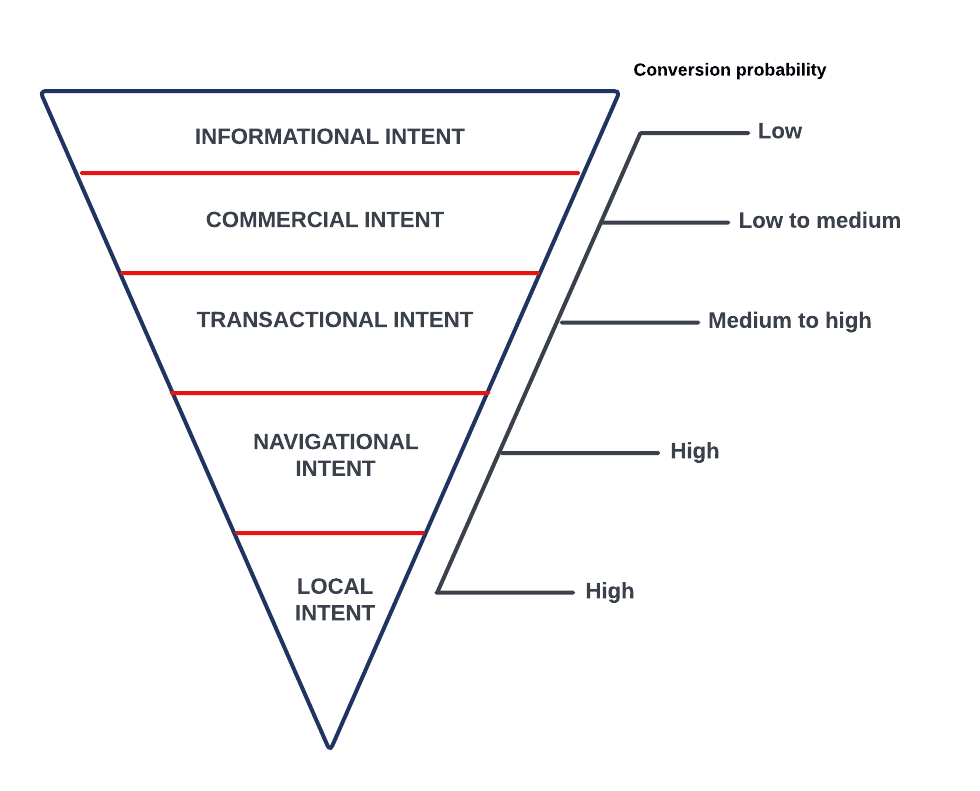

Every user landing on your website has a specific goal in mind. They may want to learn about a topic, or they could be seeking a specific page. Alternatively, they could be focused on completing a transaction.

Each page on your website needs to serve a specific user purpose. Generally speaking, you want to target informational, commercial and transactional intent, and certain businesses may also want to drill down into navigational and local intent.

Informational is the most common search intent, and while informational content will attract the most organic traffic, it is the least likely to convert. Commercial intent has a low-to-medium likelihood of conversion, and transactional intent a medium-to-high likelihood.

Ensuring your pages are mapped to the relevant purpose is vital. At a basic level, make sure product-focused pages carry transactional-intent content and blog content targets informational-intent keywords.

At a more granular level, a content audit that identifies which pages need remapping to the most appropriate user intent is key to attracting the right mix of organic traffic, including visitors who are ready to convert.
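As a rough first pass before a proper SERP-based review, a content audit can flag pages whose target keyword signals a different intent from the page type. The sketch below assumes an export of (URL, page type, target keyword) rows. The modifier lists and the page-type mapping are hypothetical and illustrative only; keyword modifiers are a weak proxy for the intent that search results actually reflect.

```python
# Hypothetical modifier lists; checked in this order, so the first match wins.
INTENT_MODIFIERS = {
    "transactional": ("buy", "price", "order", "discount", "cheap", "deal"),
    "commercial": ("best", "review", "vs", "compare", "top"),
    "informational": ("how", "what", "why", "guide", "tips", "ideas"),
}

# Assumed page-type -> intent mapping, following the basic rule of thumb above.
EXPECTED_INTENT = {"product": "transactional", "blog": "informational"}


def classify(keyword: str) -> str:
    """Guess a keyword's intent from modifier words; 'unclassified' if none match."""
    words = keyword.lower().split()
    for intent, modifiers in INTENT_MODIFIERS.items():
        if any(m in words for m in modifiers):
            return intent
    return "unclassified"


def audit(pages):
    """Yield (url, expected, detected) for pages whose keyword intent contradicts the page type."""
    for url, page_type, keyword in pages:
        expected = EXPECTED_INTENT.get(page_type)
        detected = classify(keyword)
        if expected and detected not in (expected, "unclassified"):
            yield url, expected, detected


if __name__ == "__main__":
    pages = [
        ("/shop/trail-shoe", "product", "buy trail running shoes"),
        ("/blog/trail-shoes", "blog", "best trail running shoes"),
        ("/blog/how-to-lace", "blog", "how to lace running shoes"),
    ]
    for row in audit(pages):
        print(row)
```

Here the blog post targeting "best trail running shoes" is flagged because the keyword reads as commercial rather than informational; whether to remap the page or retarget the keyword is then a judgement call informed by the SERP.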

5. Leaving pages with intent mismatch

Enterprise sites can easily end up with hundreds or thousands of pages that don’t match user intent. Unfortunately, these pages are unlikely to rank well in the SERPs until you perform a mismatch analysis and remap the content.

When search engines match crawled URLs to search terms and rank them in the SERPs, the top results tend to be the pages that match the user intent behind the term.

How do search engines do this? They analyse the competitive landscape for the search term and assess which types of content are most popular with users. From there, they prioritise pages whose intent matches the search query.

If your content has been optimised for a search term, but not for the user intent reflected in SERPs, your page is much less likely to rank well.

6. Not using entities to optimise for snippets

An entity is a singular, unique and well-defined thing or concept that is not limited to a specific word. For example, ‘automobile’, ‘vehicle’ and ‘auto’ can all refer to the same entity, as can misspellings such as ‘kar’ for ‘car’, images of different car models and symbols such as the silhouette of a car.

When you’re writing about a specific topic, a SERP analysis will help you identify what the search engine considers the ideal answer for the entity. For example, you can see which type of snippet is given priority in the SERP, along with alternative definitions in the knowledge panel or the ‘People also ask’ box.

By optimising your content for entities and targeting featured snippets, you make it more likely that search engines will prioritise your pages in the SERPs.

How Skittle Digital can help

We can work with you to remap your site architecture with minimal risk of organic ranking loss, and help you identify an optimal taxonomy for your enterprise site structure.

We can also drill down into your page architecture, performing a user intent audit and helping you to remap content currently suffering from intent mismatch.

As part of Skittle Digital’s Free Acquisitions Workshop, we uncover the strengths and weaknesses in your site’s data classification. There are a limited number of workshops available each month and we book up quickly. Book yours today.